Why?

Most of the tutorials I've seen on convolutional neural networks either focus on a basic analogy or go straight into describing terminology. I therefore aim to start with an overview of the stages involved in CNNs (Convolutional Neural Networks), and then provide an analogy, a small glossary of key terms, and external resources for further help. Make sure to use the glossary to understand the key terms used throughout this post, and continue on to a few of the other articles and videos mentioned in my resources section! By the way, don't expect to completely understand CNNs straight away, as they ain't all that simple!

Note that I'll be providing tangible/practical code in another one of my problem-solution blog posts (where I take a problem I've had and explain my final solution, along with how I overcame some major hurdles).

Key Stages?

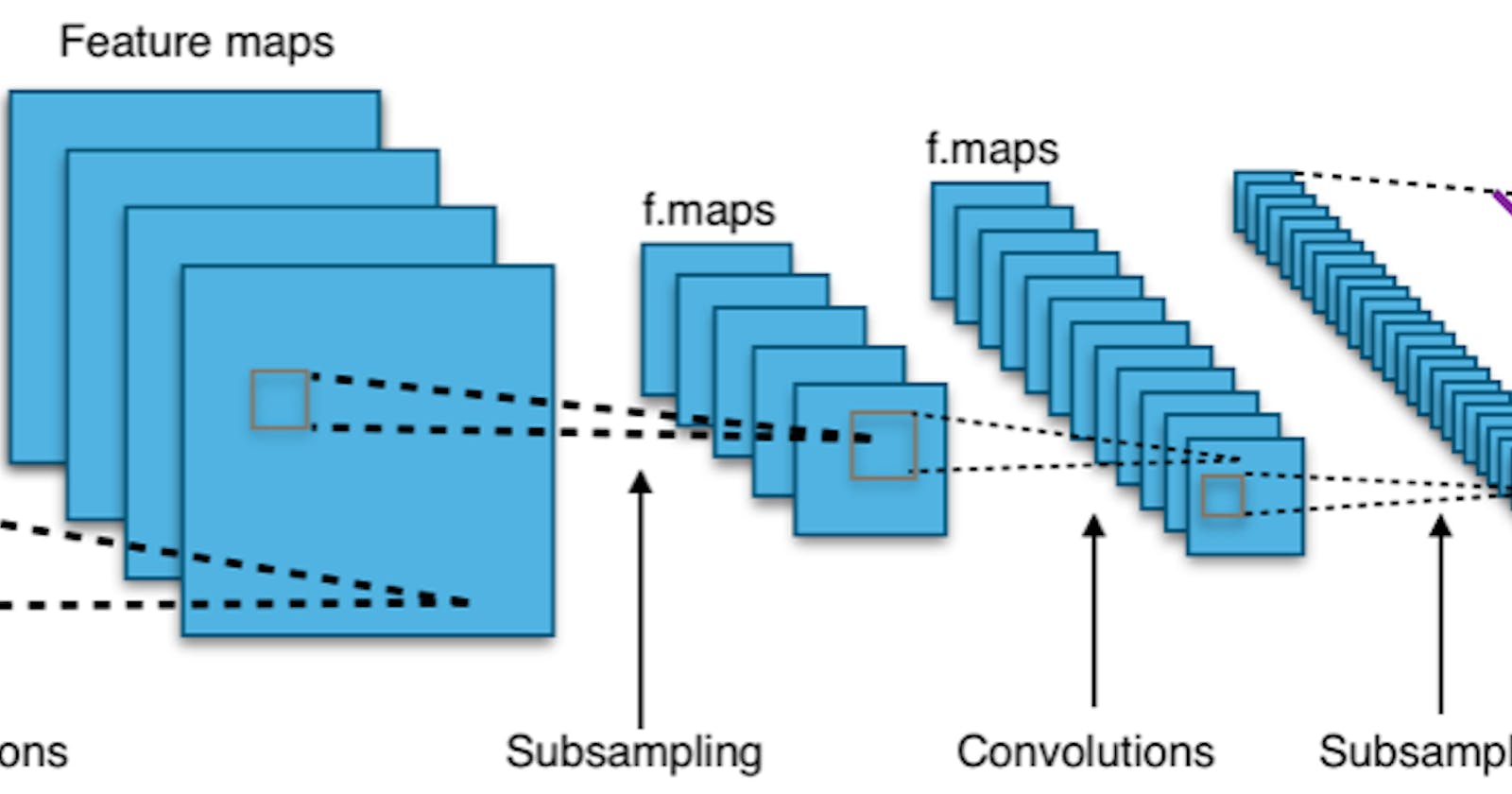

- Convolutional Layers (extract features from the input)

- Filters (matrices of weights) convolve over the input to produce feature maps

- When the filter and input are similar, a high number is produced

- ReLU activation functions are applied to the feature maps to introduce non-linearity

- Pooling/Down Sampling (combine clusters of neurons together) to reduce dimensionality

- Flattening (unroll the pooled feature maps into one long vector)

- Fully Connected Layers (connect a neuron to all those in the next layer)
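To make the stages above a little more concrete, here is a minimal NumPy sketch of one pass through them. It is only an illustration under my own assumptions: the helper names (`convolve2d`, `relu`, `max_pool`), the 6x6 input, the 3x3 kernel and the random, untrained weights are all made up for the example, and a real network would learn its weights and use a proper deep learning library.

```python
import numpy as np


def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map.

    Strictly speaking this is cross-correlation, which is the operation
    deep learning libraries usually call "convolution".
    """
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            patch = image[top:top + kh, left:left + kw]
            # Multiply element-wise, then sum: the result is high when the
            # patch resembles the kernel.
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map


def relu(x):
    """Replace negative values with zero (the non-linearity)."""
    return np.maximum(x, 0)


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop any rows/columns left over
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


rng = np.random.default_rng(0)
image = rng.random((6, 6))                   # hypothetical grayscale input
kernel = rng.standard_normal((3, 3))         # untrained filter weights
dense_weights = rng.standard_normal((4, 3))  # 4 flattened inputs, 3 outputs

feature_map = relu(convolve2d(image, kernel))  # shape (4, 4)
pooled = max_pool(feature_map)                 # shape (2, 2)
flat = pooled.flatten()                        # shape (4,)
scores = flat @ dense_weights                  # shape (3,), one per class
```

The shapes in the comments are the part worth following: each stage shrinks or reshapes the data until a single vector is left for the fully connected layer.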

Analogy?

You probably won't understand the above descriptions straight away; however, a worded example may feel more intuitive (and ease the confusion)!

So humor me and imagine the following scenario:

- You're given a few hundred paintings and need to identify which picture corresponds to which shape

- None of the paintings are too precise, and the grid they were painted onto is HUGE (so there's no use just trying every single combination in a fully connected artificial neural network)

- You notice that each picture/painting is composed of smaller, more subtle, strokes (lines), which form curves, which themselves create each shape's final outline

This might sound insane (are we like 2?), but more complicated and meaningful problems can be solved in the exact same way!

The process of segregating/labelling images begins with realizing that you can't comprehend a full picture at once, so you must break each one down into smaller 2x2 squares. You can move across the picture with a stride of 2 pixels at a time and compare these squares against a few filters. The filters themselves are just small grids that resemble distinctive features which may be present in the original input image. Examples of basic features are lines in different directions:

- Horizontal

- Vertical

- Diagonal rising from left to right

- Diagonal falling from left to right
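As a rough sketch of that comparison, the snippet below cuts a tiny 4x4 picture into 2x2 squares with a stride of 2 and scores each square against four line filters. Everything here is a made-up example of mine: the filter values (+1 where the line is, -1 elsewhere), the filter names, the `best_matches` helper and the picture itself. Real CNNs learn their filter values rather than having them written by hand.

```python
import numpy as np

# Hand-written 2x2 filters: +1 where the line should be, -1 elsewhere.
filters = {
    "horizontal": np.array([[1, 1], [-1, -1]]),
    "vertical": np.array([[1, -1], [1, -1]]),
    "diagonal_rising": np.array([[-1, 1], [1, -1]]),   # bottom-left to top-right
    "diagonal_falling": np.array([[1, -1], [-1, 1]]),  # top-left to bottom-right
}

# A 4x4 picture whose four 2x2 squares each contain one kind of line.
image = np.array([
    [1, 1, 1, 0],
    [0, 0, 1, 0],
    [0, 1, 1, 0],
    [1, 0, 0, 1],
])


def best_matches(image, filters, size=2, stride=2):
    """Return the best-scoring filter name for each square of the image."""
    matches = {}
    for row in range(0, image.shape[0] - size + 1, stride):
        for col in range(0, image.shape[1] - size + 1, stride):
            patch = image[row:row + size, col:col + size]
            # Similarity score: multiply element-wise, then sum.
            scores = {name: int(np.sum(patch * f)) for name, f in filters.items()}
            matches[(row, col)] = max(scores, key=scores.get)
    return matches


matches = best_matches(image, filters)
for position, name in matches.items():
    print(position, name)
```

Each square scores 2 against the filter that matches its line and 0 or less against the others, so the top-left square comes out as `horizontal`, the top-right as `vertical`, the bottom-left as `diagonal_rising` and the bottom-right as `diagonal_falling`.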

By convolving from one mini-image (receptive field) to the next and recording how similar each receptive field is to each filter grid, you build up a feature map. Pooling then down-samples that map into a smaller image. The aim of this gradual comparative process is to form a more abstract, higher-level image composed of lines instead of individual pixels!

Now the enlightening idea is that you can apply the same process used to extract lines from pixels to find curves in lines, and then shapes formed from the curves! Each of these stages forms a separate layer of your neural network. The layers are separated by activation functions (to introduce non-linearity), and fully connected layers at the end join together the different patterns to produce a final output!

Glossary?

| Term | Definition |
| --- | --- |
| CNN | Convolutional Neural Network |
| Kernel/Channel | A matrix of weights used to produce a feature map by convolving over the input (note that multiple can be used to better preserve spatial depth) |
| Filter | A set of kernels/channels |
| Convolving | Moving through a broken-down version of the input, multiplying the input values in each section element-wise by the filter/kernel and summing the results |
| Activation/Feature Map | The original input processed by filters to accentuate certain features (effectively performs operations like edge detection, blur, etc.) |
| Stride | The number of divisions of the input to scroll across at a time |
| Receptive Field | The part of the input which the filter is scrolling over |
| Padding | Adding zeroes (i.e. zero-padding) to the input or dropping part of it (valid-padding), to mitigate the impact of the stride not perfectly dividing the input (i.e. there is a remainder) |
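The stride and padding entries interact through one small formula: along a single dimension, the feature map has `floor((input + 2 * padding - kernel) / stride) + 1` positions. Here is a small sketch of it; the function name `output_size` and its parameter names are my own, not from any library.

```python
def output_size(input_size, kernel_size, stride=1, padding=0):
    """Number of positions a kernel can take along one dimension."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    padded = input_size + 2 * padding  # zeroes added on both sides
    if kernel_size > padded:
        raise ValueError("kernel is larger than the padded input")
    # Integer division drops any remainder the stride cannot reach.
    return (padded - kernel_size) // stride + 1


print(output_size(4, 2, stride=2))             # 2: the stride divides evenly
print(output_size(5, 2, stride=2))             # 2: the last column is dropped
print(output_size(5, 3, stride=1, padding=1))  # 5: zero-padding keeps the size
```

The second call is the "remainder" case from the padding entry: with no zero-padding, the fifth column never gets covered by the filter.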

Places to LEARN MORE?

I've come across several amazing blogs and videos describing how convolutional neural networks work, so here's a rough list of the ones I feel are the most valuable!

- MIT 6.S191: Convolutional Neural Networks is probably the most well-rounded and (fairly) complete video on CNNs

- A Beginner's Guide To Understanding Convolutional Neural Networks is just amazing (I wish I had read this one first); it goes through a few details about how the filters actually work, which no other guide did

- A friendly introduction to Convolutional Neural Networks and Image Recognition has the most easily interpretable example situations; the example scenarios build from extremely simple to slightly more complex

- The Complete Beginner’s Guide to Deep Learning: Convolutional Neural Networks and Image Classification has amazing visuals

- Understanding of Convolutional Neural Network (CNN) — Deep Learning is well broken down into sections

- Intuitively Understanding Convolutions for Deep Learning is good for consolidation once the rough ideas behind CNNs are understood; however, it's quite confusing at times (especially for a first read)

- Neural Network that Changes Everything is from Computerphile and is a great first deep dive into the ideas behind CNNs; do note that they have other videos on these topics, but this seems like their best introduction

Cover image sourced from here

THANKS FOR READING!

Now that you've heard me ramble, I'd like to thank you for taking the time to read through my blog (or skipping to the end).